FPGA-based Haze Removal Architecture Using Multiple-exposure Fusion

Abstract

Haze removal is useful in vision-based applications such as traffic monitoring and driver assistance systems. Although various methods have been developed, optimizing them for real-time operation remains a challenge because of their compute-intensive processes. This paper presents an FPGA-based approach to single-image haze removal that relies on artificial multiple-exposure image fusion and detail enhancement. The Dark Channel Prior is also employed for image blending because of its high efficiency in haze removal. The proposed method effectively removes haze to produce high-quality images and shows competitive results in quantitative and qualitative evaluations against other dehazing algorithms. Furthermore, hardware verification with low resource requirements and high processing speed confirms that the design is suitable for real-time tasks on small devices.

Keywords:

haze removal, multiple-exposure, detail enhancement, dark channel prior, image fusion, FPGA

Ⅰ. Introduction

Haze or fog, a suspension of microscopic particles in the air, degrades both the visual quality of images and the performance of image processing. Haze removal, or dehazing, is a process that minimizes this adverse impact. Recently, single-image dehazing has drawn more attention than multi-image techniques because it does not require rich knowledge of the scene. Several approaches in this direction have achieved remarkable results. He et al. [1] introduced the well-known Dark Channel Prior (DCP) to estimate the atmospheric light and the transmission map. Zhu et al. [2] presented the Color Attenuation Prior, which models scene depth as a function of brightness and saturation. Galdran et al. [3], from another point of view, applied multi-scale fusion to a set of artificially under-exposed images. However, costly computations such as the depth map refinement in [1], the large guided filter in [2], and the Laplacian pyramid fusion in [3] still limit their computational efficiency. In this paper, we propose an FPGA-based method for single-image dehazing [4] that relies on multi-exposure image fusion. The algorithm was derived by analyzing the drawbacks of Galdran et al.'s method [3] when executed on hardware and replacing its expensive operations with uncomplicated alternatives. As a result, hardware resource consumption is reduced while still achieving impressive dehazing performance and high processing throughput. The rest of this paper is organized as follows: Section Ⅱ details the algorithm, Section Ⅲ describes the hardware design, Section Ⅳ evaluates the method, and Section Ⅴ concludes the study.

Ⅱ. Proposed Algorithm

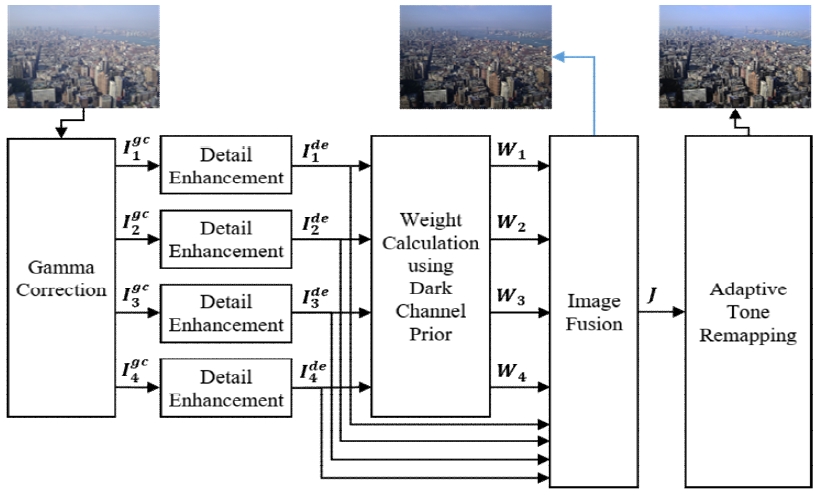

Fig. 1 shows the overall block diagram of the proposed algorithm. The primary mechanism of our method is to generate a set of images at different exposures, enhance them, then combine the well-exposed regions within each image to build a dehazed photo.

It has been shown that pixels in a haze-free image usually have lower intensity than those in its hazy version [3]. Haze removal therefore typically decreases pixel intensity, so this work uses under-exposed images for dehazing. Gamma correction is employed to generate the under-exposed set because it is one of the simplest ways to manipulate exposure. The original hazy image is also included in the set, because it may contain haze-free areas.

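The multi-exposure set is therefore generated as:

$$
I_k(x,y) = \left[ I(x,y) \right]^{\gamma_k}, \quad k = 1, \dots, K \tag{1}
$$

where $(x,y)$ denotes the pixel coordinates, $I$ is the hazy image with intensities normalized to [0, 1], $\gamma_k$ is the gamma value of the $k$-th image, and $K$ is the number of images in the set. Setting $\gamma_1 = 1$ keeps the original hazy image in the set, while $\gamma_k > 1$ produces under-exposed versions.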
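As a minimal sketch of Eq. (1), assuming an image stored as nested Python lists of (r, g, b) tuples normalized to [0, 1] (the function name `exposure_set` and this data layout are illustrative only, not part of the hardware design), the under-exposed set can be generated as follows. The gamma values are the ones reported for the simulations in Section Ⅳ.

```python
def exposure_set(image, gammas):
    """Return one gamma-corrected copy of `image` per gamma value (Eq. (1)).

    `image` is a list of rows, each row a list of (r, g, b) tuples with
    channel values in [0, 1]. A gamma of 1 reproduces the original hazy
    image; gammas above 1 darken it (artificial under-exposure).
    """
    return [
        [[tuple(c ** g for c in px) for px in row] for row in image]
        for g in gammas
    ]


# Gamma values from the simulation settings reported in Section IV.
GAMMAS = (1.0, 1.9, 1.95, 2.0)

hazy = [[(0.8, 0.7, 0.6), (0.5, 0.5, 0.5)]]
images = exposure_set(hazy, GAMMAS)
print(len(images))        # 4
print(images[0] == hazy)  # True: gamma = 1 keeps the original
print(images[3][0][1])    # (0.25, 0.25, 0.25)
```

Because every channel value lies in [0, 1], raising it to a power above 1 can only lower it, which is why gamma correction acts as a cheap exposure reduction.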

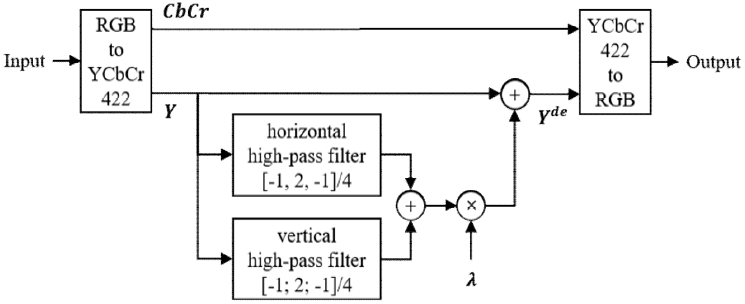

Under-exposure by gamma correction, however, damages details in the final result because it decreases intensity globally. To compensate, detail enhancement, illustrated in Fig. 2, is applied to each sub-image. This process is performed in the YCbCr color space with 4:2:2 format [5], in which the horizontal chroma resolution is halved. Because the human eye is more sensitive to variations in luminance than in chrominance, only the details in the luminance channel need to be boosted. A second-order finite impulse response (FIR) filter is employed to keep the design simple, and the gain λ determines how strongly the edges are intensified.
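The exact filter coefficients and gain structure are not given here, so the following is only a hypothetical sketch of luminance-only detail enhancement. A 3-tap (second-order) FIR low-pass with an assumed kernel [1, 2, 1]/4 extracts a base layer along each row, and the residual detail is scaled by λ. The form `base + λ(Y − base)` is an assumption chosen so that λ = 1 leaves a row unchanged, which would fit the pairing γ = 1, λ = 1 for the original image in Section Ⅳ. The function names are illustrative.

```python
def lowpass_3tap(row):
    """Second-order (3-tap) FIR low-pass with an assumed kernel [1, 2, 1] / 4,
    applied along one row of luminance samples; border samples are replicated."""
    n = len(row)
    return [
        (row[max(x - 1, 0)] + 2.0 * row[x] + row[min(x + 1, n - 1)]) / 4.0
        for x in range(n)
    ]


def enhance_luma(row, lam):
    """Scale the high-frequency part of a luminance row by the gain `lam`.

    Output = base + lam * (Y - base), clipped to [0, 1]. With lam = 1 the row
    is returned unchanged (up to float rounding); larger lam sharpens edges.
    Chroma (Cb, Cr) is not touched, so it can stay in its 4:2:2 layout.
    """
    base = lowpass_3tap(row)
    return [min(max(b + lam * (y - b), 0.0), 1.0) for y, b in zip(row, base)]


edge = [0.2, 0.2, 0.8, 0.8]
print(enhance_luma(edge, 2.0))  # approximately [0.2, 0.05, 0.95, 0.8]
```

The dark side of the step is pushed darker and the bright side brighter, while flat regions pass through unchanged because their detail term is zero.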

Following [3], image fusion is performed as:

$$
J(x,y) = \sum_{k=1}^{K} W_k(x,y)\, I_k^{de}(x,y) \tag{2}
$$

where $J$ is the dehazed image, $K$ is the total number of images in the multi-exposure set, $I_k^{de}$ denotes the $k$-th sub-image after detail enhancement, and $W_k$ is its corresponding weight.
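Eq. (2) is a per-pixel weighted sum. A minimal sketch, assuming the same nested-list RGB layout in [0, 1] and weight maps that are already normalized to sum to 1 at each pixel (the name `fuse` is illustrative):

```python
def fuse(images, weights):
    """Pixel-wise weighted sum of Eq. (2): J = sum_k W_k * I_k^de.

    `images[k]` is an RGB image (list of rows of (r, g, b) tuples) and
    `weights[k]` a same-sized map of scalar weights, assumed normalized so
    that they sum to 1 at every pixel.
    """
    height, width = len(images[0]), len(images[0][0])
    fused = []
    for y in range(height):
        row = []
        for x in range(width):
            acc = [0.0, 0.0, 0.0]
            for img, w in zip(images, weights):
                for c in range(3):
                    acc[c] += w[y][x] * img[y][x][c]
            row.append(tuple(acc))
        fused.append(row)
    return fused


a = [[(1.0, 0.0, 0.5)]]
b = [[(0.0, 1.0, 0.5)]]
print(fuse([a, b], [[[0.25]], [[0.75]]]))  # [[(0.25, 0.75, 0.5)]]
```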

Although under-exposure reduces haze, it also darkens most regions. The weights must therefore select the parts with the lowest haze density while keeping the naturally clear areas. We propose to calculate the weights based on the Dark Channel Prior [1]. This prior states that, in almost every local patch of a haze-free scene that does not include sky, some pixels have very low intensity in at least one color channel. The DCP is applied to each image in Eq. (2) to derive its dark channel, which is built from these dark pixels. Regions with lighter haze map to low-intensity pixels in the dark channel. The initial weight is then computed by inverting the dark channel as follows:

$$
W_k^{dcp}(x,y) = 1 - \min_{(i,j) \in \Omega(x,y)} \left( \min_{c \in \{r,g,b\}} I_k^{de,c}(i,j) \right) \tag{3}
$$

where $W_k^{dcp}$ is the initial weight, $c$ refers to a color channel of $I_k^{de}$, and $(i,j)$ denotes the coordinates of pixels within the local image patch $\Omega(x,y)$ centered at $(x,y)$. In this way, low-haze regions contribute a larger share of the dehazed image. The final weight $W_k$ used in Eq. (2) is the normalized initial weight, $W_k(x,y) = W_k^{dcp}(x,y) / \sum_{m=1}^{K} W_m^{dcp}(x,y)$, which keeps $J$ within the valid intensity range.
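A sketch of the weight computation in Eq. (3) followed by the sum-to-one normalization, assuming nested-list RGB images with values in [0, 1]. The patch radius (1, giving a 3×3 Ω) and the equal-weight fallback when all initial weights at a pixel are zero are hypothetical choices, not values from this paper; the function names are illustrative.

```python
def dark_channel(image, radius):
    """Dark channel: minimum over the color channels and over a
    (2*radius+1) x (2*radius+1) patch centered at each pixel; the patch is
    clipped at the image borders."""
    h, w = len(image), len(image[0])
    pixel_min = [[min(px) for px in row] for row in image]
    return [
        [
            min(
                pixel_min[i][j]
                for i in range(max(y - radius, 0), min(y + radius + 1, h))
                for j in range(max(x - radius, 0), min(x + radius + 1, w))
            )
            for x in range(w)
        ]
        for y in range(h)
    ]


def dcp_weights(images, radius=1):
    """Eq. (3) initial weights (1 - dark channel) for each detail-enhanced
    image, normalized so that the K weights sum to 1 at every pixel."""
    initial = [
        [[1.0 - d for d in row] for row in dark_channel(img, radius)]
        for img in images
    ]
    k_count = len(images)
    h, w = len(initial[0]), len(initial[0][0])
    weights = [[[0.0] * w for _ in range(h)] for _ in range(k_count)]
    for y in range(h):
        for x in range(w):
            total = sum(wk[y][x] for wk in initial)
            for k in range(k_count):
                # Hypothetical safeguard: equal weights if every dark channel
                # is 1 at this pixel (total == 0), avoiding division by zero.
                weights[k][y][x] = initial[k][y][x] / total if total > 0 else 1.0 / k_count
    return weights


hazy = [[(0.9, 0.8, 0.7), (0.6, 0.6, 0.6)]]
darker = [[(0.5, 0.4, 0.3), (0.2, 0.3, 0.4)]]
w_hazy, w_darker = dcp_weights([hazy, darker], radius=1)
print(w_hazy)    # approximately [[0.333, 0.333]]
print(w_darker)  # approximately [[0.667, 0.667]]
```

The darker, less hazy image has the lower dark channel and therefore receives the larger share of the fused result.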

Because haze removal decreases pixel intensity, the dehazed image tends to be dark. Adaptive Tone Remapping (ATR) [6] is therefore employed as post-processing to improve both luminance and color.

ATR generates a nonlinear tone-mapping curve according to the pixel distribution and the average luminance of the image. The curve raises intensities in low-light regions and lowers them in high-light regions. The specific formulas can be found in [6].

Ⅲ. Hardware Implementation

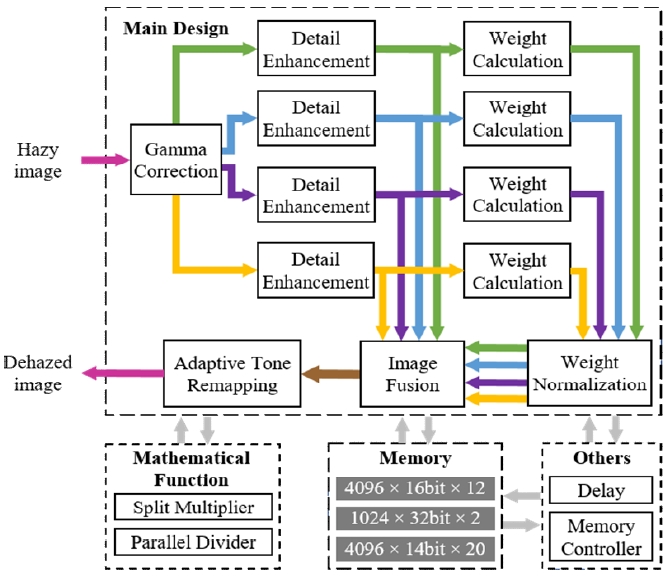

Fig. 3 presents the hardware architecture of the proposed algorithm. A total of 34 memory blocks are used, among which 4096-depth memories are employed for the haze removal computation. The design is modeled in the Verilog hardware description language and implemented on the Xilinx ZC706 evaluation board [7]; the implementation results are shown in Table 1. The entire haze removal system consumes only 7% of the registers, 17% of the lookup tables, and 12% of the available memory.

The processing speed in frames per second (fps) can be calculated as:

$$
p = \frac{f_{\max}}{(W + HB)\,(H + VB)} \tag{4}
$$

where $p$ is the processing speed, $f_{\max}$ denotes the maximum operating frequency, $W$ and $H$ are the image width and height, $HB$ refers to the horizontal blank, and $VB$ denotes the vertical blank.
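Eq. (4) implies that the pipeline processes one pixel per clock cycle, so one frame occupies (W + HB)(H + VB) cycles. The sketch below reproduces the Full HD and UW4K figures reported for Table 2 under two assumptions: f_max ≈ 184 MHz, which is back-calculated from the reported 88.6 fps at Full HD rather than read from Table 1, and a UW4K frame of 3840 × 1600 pixels. The name `max_fps` is illustrative.

```python
def max_fps(f_max_hz, width, height, h_blank=1, v_blank=1):
    """Eq. (4): one pixel per clock, so a frame takes (W + HB) * (H + VB) cycles."""
    return f_max_hz / ((width + h_blank) * (height + v_blank))


# Assumed clock: about 184 MHz, inferred from 88.6 fps at 1920 x 1080.
F_MAX = 184e6
print(round(max_fps(F_MAX, 1920, 1080), 1))  # 88.6 (Full HD)
print(round(max_fps(F_MAX, 3840, 1600), 1))  # 29.9 (UW4K, assumed 3840 x 1600)
```

The minimum blanking of one pixel and one line gives the upper bound; real video timings with larger blanking intervals lower the frame rate accordingly.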

The maximum processing speed, achieved when HB and VB are both equal to 1, is computed for different frame sizes and shown in Table 2. The design can handle up to 88.6 fps for Full HD video and 29.9 fps for the UW4K standard.

Ⅳ. Evaluation

The proposed algorithm is evaluated by comparing it with the methods in [1]-[3]. The O-HAZE and I-HAZE datasets [8], which contain outdoor and indoor pairs of hazy and haze-free images, are used in this section.

Fig. 4 shows an image containing thick fog, its haze-free counterpart, and the dehazed results of all the considered methods for qualitative evaluation. The methods of Zhu et al. and He et al. failed to eliminate the haze and suffered from color distortion. Galdran et al.'s technique was able to recover the scene, and its built-in histogram equalization kept all regions from becoming too dark. However, the whole image still appears to be covered by thin haze, and its contents are blurred. Our algorithm, on the other hand, produced a clearer and brighter dehazed image. Although light fog remains in the center, the details in that region are preserved thanks to the detail enhancement process.

Four metrics are used for quantitative assessment. Mean Square Error (MSE) measures the difference between the target image and its reference; a lower MSE indicates a smaller error. Structural SIMilarity (SSIM) [8] assesses degradation in terms of luminance, contrast, and structure. Tone Mapped Image Quality Index (TMQI) [8] evaluates how well structural information is preserved. Feature SIMilarity Index extended to color images (FSIMc) [8] quantifies image quality based on salient low-level and chromatic features. SSIM, TMQI, and FSIMc range from 0 to 1, with higher values indicating better quality.
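For reference, MSE as described above can be computed as follows. This sketch assumes RGB intensities normalized to [0, 1]; the scale used for Table 3 is not stated, and MSE values depend on it. SSIM, TMQI, and FSIMc involve considerably more machinery and are not sketched here. The function name is illustrative.

```python
def mse(target, reference):
    """Mean square error over every pixel and color channel of two same-sized
    RGB images (lists of rows of (r, g, b) tuples). Lower is better."""
    total, count = 0.0, 0
    for row_t, row_r in zip(target, reference):
        for px_t, px_r in zip(row_t, row_r):
            for a, b in zip(px_t, px_r):
                total += (a - b) ** 2
                count += 1
    return total / count


dehazed = [[(0.5, 0.5, 0.5), (1.0, 0.0, 0.0)]]
haze_free = [[(0.5, 0.5, 0.5), (0.0, 0.0, 0.0)]]
print(round(mse(dehazed, haze_free), 4))  # 0.1667
```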

In our simulation, K = 4: the values of γ are 1, 1.9, 1.95, and 2, and the corresponding values of λ are 1, 1.5, 2, and 2.5. The average scores obtained by comparing the dehazed images with the corresponding haze-free images of the O-HAZE and I-HAZE datasets are listed in Table 3, where bold numbers indicate the best value for each metric.

The proposed method obtains the best results in terms of MSE, SSIM, and TMQI, because these three metrics are designed to evaluate grayscale images, in which the detail enhancement preserved most of the details and structure. However, removing too much haze causes information loss in some dark regions, which explains why our SSIM score is close to that of Galdran et al. Under the FSIMc metric, which also assesses chrominance, our approach ranks second thanks to the color emphasis of the ATR post-processing.

Ⅴ. Conclusion

A simple but efficient single-image haze removal method for FPGA implementation has been proposed. The technique combines multi-exposure image fusion guided by the Dark Channel Prior with detail enhancement. It achieved impressive results in both qualitative and quantitative evaluations compared with other methods. The hardware architecture is compact because costly computations were reduced, yet it can still process UW4K video at a throughput of 29.9 fps.

Acknowledgments

This research was supported by the Basic Science Research Program through the National Research Foundation of Korea (NRF) funded by the Ministry of Education (NRF-2015R1D1A1A01060427).

References

[1] K. He, J. Sun, and X. Tang, "Single Image Haze Removal Using Dark Channel Prior", IEEE Transactions on Pattern Analysis and Machine Intelligence, Vol. 33, No. 12, pp. 2341-2353, Dec. 2011. https://doi.org/10.1109/TPAMI.2010.168

[2] Q. Zhu, J. Mai, and L. Shao, "A Fast Single Image Haze Removal Algorithm Using Color Attenuation Prior", IEEE Transactions on Image Processing, Vol. 24, No. 11, pp. 3522-3533, Nov. 2015. https://doi.org/10.1109/TIP.2015.2446191

[3] A. Galdran, "Image dehazing by artificial multiple-exposure image fusion", Signal Processing, Vol. 149, pp. 135-147, Aug. 2018. https://doi.org/10.1016/j.sigpro.2018.03.008

[4] Q. H. Nguyen and B. Kang, "Single-image Dehazing using Detail Enhancement and Image Fusion", The Fifth International Conference on Consumer Electronics (ICCE) Asia, Seoul, Nov. 2020.

[5] K. Jack, "Video Demystified: A Handbook for the Digital Engineer", 3rd ed., LLH Technology Publishing, May 2001.

[6] H. Cho, G. J. Kim, K. Jang, S. Lee, and B. Kang, "Color Image Enhancement Based on Adaptive Nonlinear Curves of Luminance Features", Journal of Semiconductor Technology and Science, Vol. 15, No. 1, pp. 60-67, Feb. 2015. https://doi.org/10.5573/JSTS.2015.15.1.060

[7] "Zynq-7000 SoC Data Sheet: Overview (DS190)", https://www.xilinx.com/support/documentation/datasheets/ds190-Zynq-7000-Overview.pdf [accessed: Mar. 18, 2020].

[8] D. Ngo, G. D. Lee, and B. Kang, "Improved Color Attenuation Prior for Single-Image Haze Removal", Applied Sciences, Vol. 9, No. 19, p. 4011, Jan. 2019. https://doi.org/10.3390/app9194011

2016 : BS degree in Department of Electronic and Telecommunication Engineering, University of Danang.

2019 ~ present : Pursuing MS degree in Electronic Engineering, Dong-A University.

Research interests : VLSI architecture design and image processing

1985 : BS degree in Electronic Engineering, Yonsei University.

1987 : MS degree in Electronic Engineering, Pennsylvania University.

1990 : PhD degree in Electrical and Computer Engineering, Drexel University.

1989 ~ 1999 : Senior Staff Researcher, Samsung Electronics.

1999 ~ present : Professor, Department of Electronic Engineering, Dong-A University.

Research interests : image/video processing, pattern recognition, and SoC designs